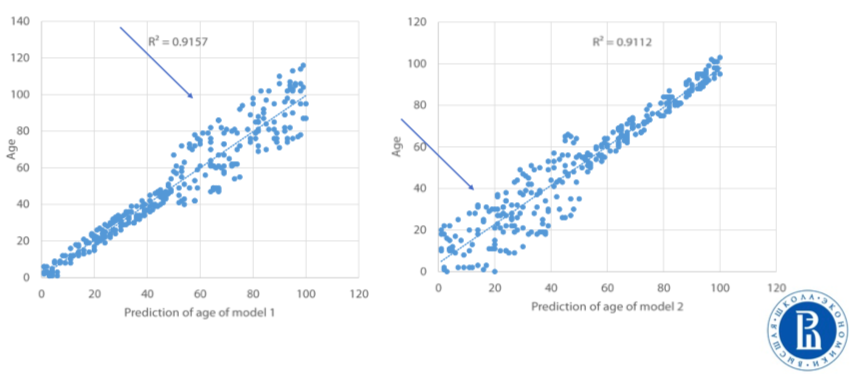

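Suppose we have two models, each of which performs well on only about half of the data. The figure shows a case from an age dataset: one model does well when the age is above 50, and the other does well when it is below 50. Try combining the two models and check whether the score is better than what each model achieves on its own.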

(model 1 + model 2) / 2

Screenshot from Coursera
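As a minimal sketch of the simple average, the snippet below blends two sets of predictions and compares RMSE. The numbers are made up for illustration (they are not from the Coursera slide), and `rmse` is a small helper defined here, not a library function.

```python
import numpy as np

# Hypothetical toy data: true ages and the predictions of two models.
# Model 1 is accurate below 50, model 2 is accurate at 50 and above.
age = np.array([20.0, 30.0, 40.0, 60.0, 70.0, 80.0])
pred_model1 = np.array([21.0, 29.0, 41.0, 45.0, 55.0, 65.0])
pred_model2 = np.array([35.0, 45.0, 55.0, 61.0, 69.0, 81.0])


def rmse(y_true, y_pred):
    """Root mean squared error between two 1-D arrays."""
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


# Simple average: (model 1 + model 2) / 2
avg_pred = (pred_model1 + pred_model2) / 2

print("model 1 RMSE:", rmse(age, pred_model1))
print("model 2 RMSE:", rmse(age, pred_model2))
print("average RMSE:", rmse(age, avg_pred))
```

On this toy data the average scores better than either model alone, because each model's large errors are halved by the other model's accurate predictions.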

This can be changed to a weighted average to see whether the score improves.

Example: (model 1 × 0.7 + model 2 × 0.3)
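A rough sketch of the weighted version follows. It applies the 0.7 / 0.3 weights from the example and then scans a coarse grid of weights. The data is made up, `weighted_blend` and `rmse` are helper names introduced here, and in practice the weights would presumably be tuned on a validation set rather than on the test data.

```python
import numpy as np

# Hypothetical validation data: true ages and two models' predictions.
y_valid = np.array([20.0, 30.0, 40.0, 60.0, 70.0, 80.0])
pred_model1 = np.array([21.0, 29.0, 41.0, 52.0, 62.0, 70.0])
pred_model2 = np.array([35.0, 45.0, 55.0, 61.0, 69.0, 81.0])


def rmse(y_true, y_pred):
    """Root mean squared error between two 1-D arrays."""
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def weighted_blend(p1, p2, w):
    """Return w * p1 + (1 - w) * p2 for a weight w in [0, 1]."""
    return w * p1 + (1.0 - w) * p2


# The fixed weights from the example: 0.7 for model 1, 0.3 for model 2.
blend_70_30 = weighted_blend(pred_model1, pred_model2, 0.7)

# Scan a coarse grid of weights and keep the one with the lowest RMSE.
weights = np.linspace(0.0, 1.0, 11)
scores = [rmse(y_valid, weighted_blend(pred_model1, pred_model2, w)) for w in weights]
best_idx = int(np.argmin(scores))
best_weight, best_score = float(weights[best_idx]), scores[best_idx]

print(f"0.7/0.3 blend RMSE: {rmse(y_valid, blend_70_30):.3f}")
print(f"best weight for model 1: {best_weight:.1f}, RMSE: {best_score:.3f}")
```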

Take the best of each model: use each one only on the part where it performs well, and see whether the score improves.
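One possible way to sketch this conditional combination is shown below. Since the true age is unknown at prediction time, the switch here relies on a rough estimate (the simple average of the two models). That choice is an assumption made for illustration, as are the toy numbers and the threshold of 50 taken from the age example.

```python
import numpy as np

# Hypothetical toy data: model 1 is accurate below 50, model 2 at 50 and above.
age = np.array([20.0, 30.0, 40.0, 60.0, 70.0, 80.0])
pred_model1 = np.array([21.0, 29.0, 41.0, 45.0, 55.0, 65.0])
pred_model2 = np.array([35.0, 45.0, 55.0, 61.0, 69.0, 81.0])


def rmse(y_true, y_pred):
    """Root mean squared error between two 1-D arrays."""
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


# Assumption: use the simple average as a rough guess of which region a row is in.
rough_estimate = (pred_model1 + pred_model2) / 2

# Use model 1 where the rough estimate is below 50, otherwise model 2.
conditional_pred = np.where(rough_estimate < 50, pred_model1, pred_model2)

print("simple average RMSE:", rmse(age, rough_estimate))
print("conditional RMSE:   ", rmse(age, conditional_pred))
```

On this toy data the conditional combination keeps only the accurate half of each model, so it scores better than both the individual models and the simple average.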

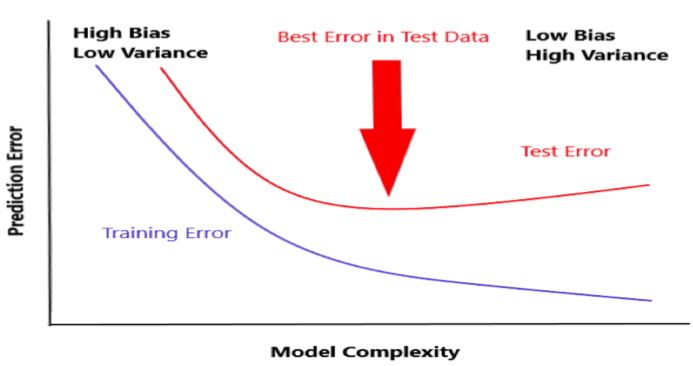

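Two sources of error arise during modeling: error due to bias (underfitting) and error due to variance (overfitting).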

Screenshot from Coursera

BaggingClassifier and BaggingRegressor from sklearn

    import numpy as np
    from sklearn.ensemble import RandomForestRegressor

    # train is the training data
    # test is the test data
    # y is the target variable
    model = RandomForestRegressor()
    bags = 10
    seed = 1

    # create an array to hold the bagged predictions
    bagged_prediction = np.zeros(test.shape[0])

    # loop once for each bag
    for n in range(bags):
        model.set_params(random_state=seed + n)  # update the seed
        model.fit(train, y)  # fit the model
        preds = model.predict(test)  # predict on the test data
        bagged_prediction += preds  # add predictions to the running total

    # take the average of the predictions
    bagged_prediction /= bags
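For comparison, here is a minimal sketch of a similar idea using sklearn's `BaggingRegressor`, which fits each base estimator on a bootstrap sample and averages their predictions. The synthetic data from `make_regression`, the choice of `DecisionTreeRegressor` as the base estimator, and the variable names are assumptions for illustration only.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import BaggingRegressor
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Hypothetical synthetic data standing in for train / test / y.
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=1)
train, test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=1)

# 10 bags, matching bags=10 in the manual loop; the base estimator is passed
# positionally so the call does not depend on the keyword name.
bagging = BaggingRegressor(DecisionTreeRegressor(), n_estimators=10, random_state=1)
bagging.fit(train, y_train)

# predict() returns the average over the fitted estimators.
bagged_prediction = bagging.predict(test)
print(bagged_prediction.shape)
```

`BaggingClassifier` follows the same pattern with a classifier as the base estimator.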

Marios (瑪博) wrote his PhD thesis on ensemble methods, and he is also a former Kaggle world No. 1.